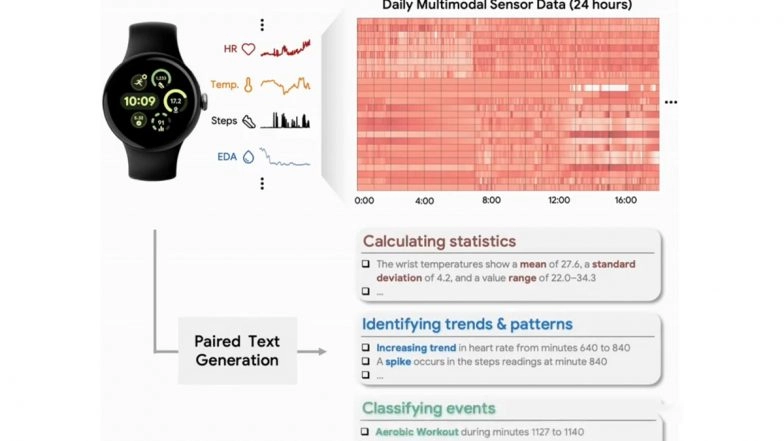

On July 29, 2025, Google Research announced SensorLM in a post on X (formerly Twitter). SensorLM is a new family of sensor-language foundation models designed to let wearable data “speak” for itself by making it understandable in natural language. The models were trained on around 59.7 million hours of multimodal sensor data collected from over 103,000 individuals. Google said, “We evaluated SensorLM on a wide range of real-world tasks in human activity recognition and healthcare. The results demonstrate significant advances over previous state-of-the-art models.” SensorLM can also generate human-readable descriptions of sensor data, setting a new benchmark in sensor data interpretation.

Google SensorLM

Let your wearable data "speak" for itself! Introducing SensorLM, a family of sensor-language foundation models trained on ~60 million hours of data, enabling robust wearable data understanding with natural language. → https://t.co/1vL6df5pMa pic.twitter.com/NxqQ58f1Bl

— Google Research (@GoogleResearch) July 28, 2025

